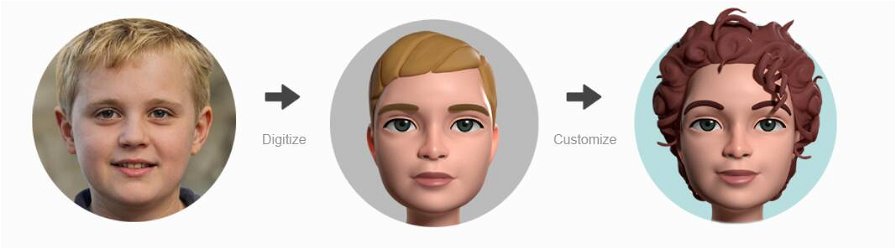

Hao Li is an innovator in the fields of computer vision and computer graphics. An associate professor at the University of Southern California, a collaborator of studios such as Weta Digital and Industrial Light & Magic, a researcher and an entrepreneur, he is one of the most influential figures in the field of digital humans. Apple's Animoji, introduced on the iPhone, grew out of his research. Among other things, he worked on building a digital Paul Walker after the actor's untimely death during the shooting of Fast and Furious 7, and he creates digital avatars with his company, Pinscreen. Why is Hao Li also an important voice on the risks these technologies pose, such as deepfakes?

This Sunday (March 2021), Hao Li will speak at VFXRio Live about digital humans, artificial intelligence and the future of these technologies. The event starts at 3 pm local time (Brazil) and can be watched for free by registering at this address. We had the opportunity to interview Hao Li exclusively on these topics.

Seeing is (not) believing

Many of his publications explore techniques for building 3D faces from simple pictures using deep learning and neural networks. At the same time, many of his talks, such as his keynote at the World Economic Forum in Davos, deal with the problem of deepfakes: the use of those same technologies to create videos and pictures with the likeness of people who have not consented. Hao Li doesn't see a contradiction between these two messages.

We must take responsibility for how we use these technologies. We can't just stop using them, because someone else will use them anyway. The right thing to do, I think, is to make sure they don't get out of control. We have been discussing this with many other researchers at the forefront of this kind of technology, and one thing that was very obvious was the need to educate the public as a first step, to "inoculate" it so that people will be able to protect themselves.

Hao Li paints a picture that is not far from the realm of the possible: what if I'm not talking with the real Hao Li? What if someone had hacked into his e-mail account and joined the videoconference using real-time deepfake technology? How could I tell? Knowing that this can happen is a first step toward raising my level of attention, but surely it can't be enough. Indeed, Hao Li continues:

Sure, knowing the risk is just not enough. First of all, it's possible to create content that is practically undetectable. I say "practically undetectable" because detection technology will eventually catch up, but to the naked eye it will be impossible to detect. An important factor, I believe, is the place where this type of media is consumed, such as social media, where people look for news. There are places like TikTok or Snapchat where anyone can upload anything: the content is not verified, but it can spread very quickly. I do think, though, that we are already moving in the right direction, with people starting to flag suspicious content, not just deepfakes but fake news in general. But while we need the ability to flag something that is already circulating, we also need technology that can automatically flag, without human intervention, content that is suspicious or may have been manipulated, so that someone can look at it before it starts spreading.

Truer than true

Major organizations such as DARPA (Defense Advanced Research Projects Agency) have launched projects like SemaFor (Semantic Forensics), with Hao Li as a collaborator, to anticipate and counter next-generation threats. These include more sophisticated AI manipulation technologies and new kinds of deepfakes that are not videos but may take the form of a single image or frame, around which a narrative can be spread to manipulate opinions. Hao says:

I believe that technology will soon reach a point where generated content will be impossible to detect, but then we have to consider the effort required to create such content. The goal should be to make it as difficult as possible, to prevent this kind of thing from spreading at scale. It's like spam e-mail: it has been circulating for 20 years and can't be stopped for good. We can filter most of it, though, and people are somehow "inoculated" against its threats.

Democratization of CGI

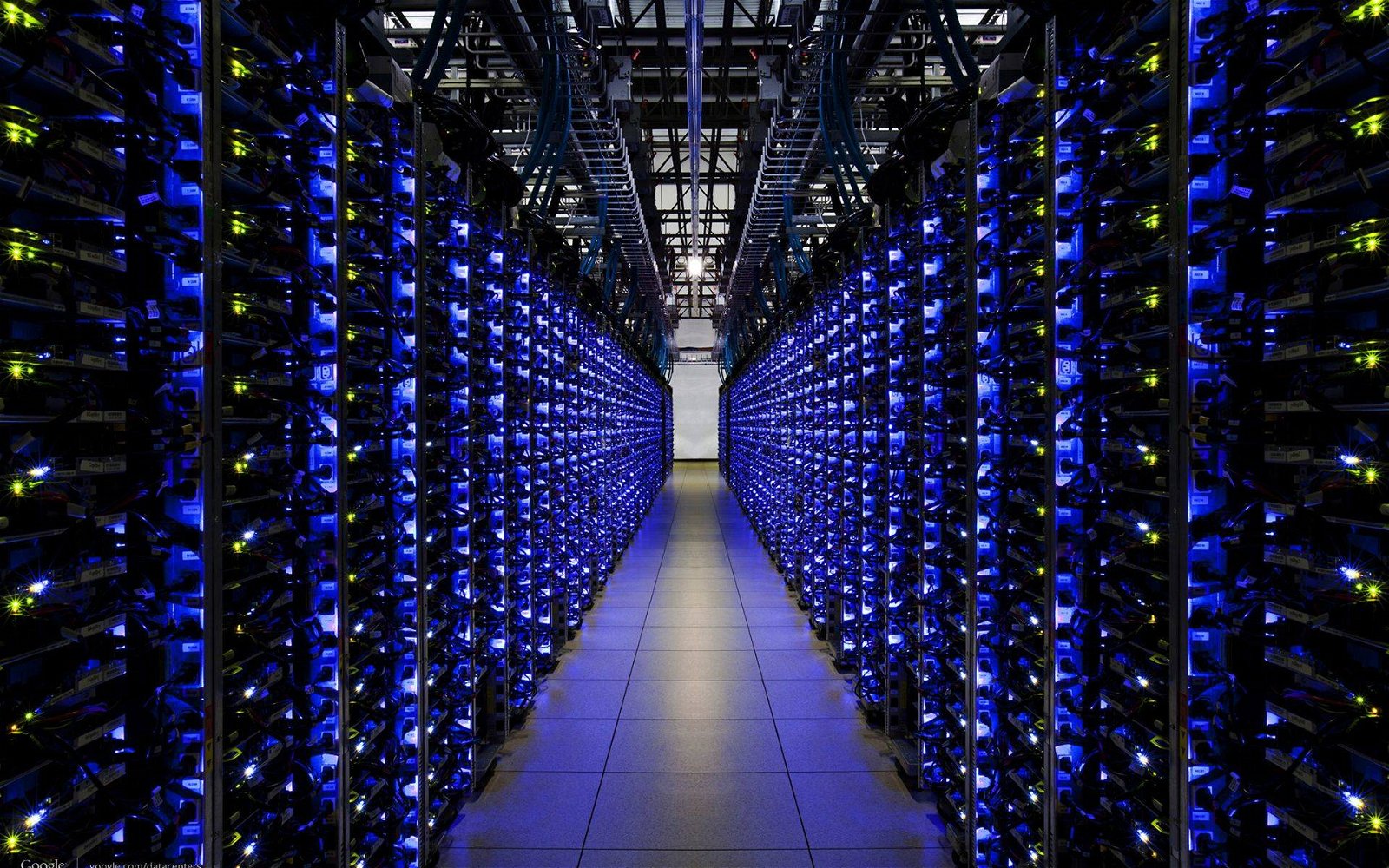

In entertainment, the effort required to create photorealistic images is still huge. What is called the VFX pipeline is actually an assembly line of programmers, digital artists and motion capture, with very long times to produce a single frame: days of work for a handful of frames, as in the case of Fast and Furious 7. At the same time, companies like Pinscreen are inventing new algorithms that achieve better and better results by replacing standard CGI methods with neural networks and deep learning, even in real time, thanks to available (and growing) processing power. We asked Hao Li whether the days of traditional CGI are numbered.

Not in the short term. CGI is still very important for everything that must be rendered, for everything that is 3D. I see deep learning complementing CGI, and in a sense that's what we're already doing. We are building hybrid systems in which creatives keep the same control CGI gives them, manipulating design content with full control. Deep learning approaches can help by solving problems that are very hard, for example generating a scene that immediately looks real because it's based on real data. In the long run, though, I can see everything being replaced by neural rendering. There are already advanced algorithms: last year a paper introduced a novel technique called Neural Radiance Fields (NeRF), which lets you skip the entire standard rendering process and build a 3D object from a series of pictures. These methods still have many limitations, such as the time needed to generate a single image, although we recently submitted a paper demonstrating that this can be done very fast, in real time. And this is just the beginning.

The copyright of one's own image

If all it takes is an image (or a handful of images) to generate a 3D model of a scene or a person, should we talk about some form of copyright over one's own image? Perhaps an NFT to track ownership of your own appearance? Hao Li sounds skeptical:

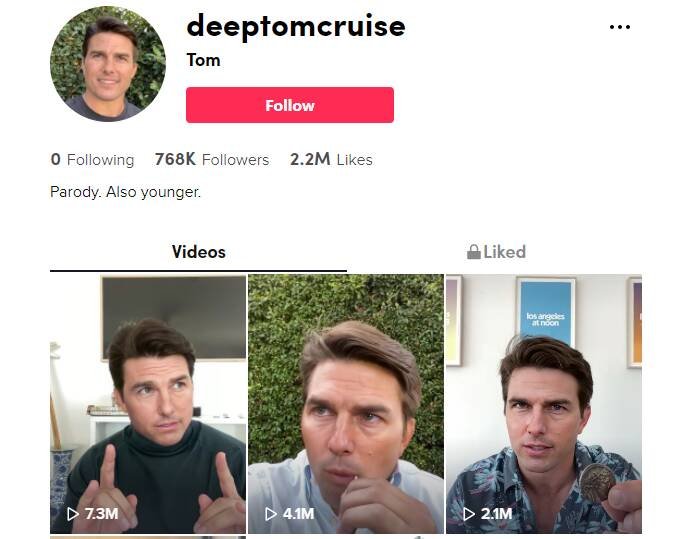

You can't control it. Like any digital object, even if you use methods to control distribution or authentication, once you can see it, you can clone it. One thing that may make sense, I think, is to have regulations, but cloning someone is not necessarily harmful. It can be used to create something harmful, for example to make you say something you wouldn't want to say; but there are also things like deepTomCruise on TikTok: is it really that bad? It doesn't harm Tom Cruise; it even makes him more popular. Then there is making fun of politicians, and that's something related to free speech. Anyone can write whatever they want, even if this ability is sometimes used to write evil things and to manipulate others, but free speech is a right nonetheless. In the end, it comes down to the platforms where these things circulate to check them. Can you really prevent someone from stealing someone else's identity? It's definitely something we should be concerned about, given that it's very easy to do and to use for evil purposes. It's very difficult to control from a technical standpoint: even simpler measures like watermarking images or encrypting e-mail don't get widely adopted because of the extra effort required.

Virtual shop assistants and digital models

Digital avatars, the focus of Pinscreen, are not new: the sci-fi and cyberpunk imagination has already shown us virtual avatars, from the giant holographic advertisements in Blade Runner and Blade Runner 2049 to the virtual librarian played by Orlando Jones in The Time Machine. Although science fiction often depicts digital interaction as cold and impersonal, Hao Li thinks we already have many examples of positive interactions around us:

There are places where these interactions are not dystopian, like virtual influencers. Brud, with Lil Miquela (a model, music artist and Instagram influencer with 1 million followers, entirely computer-generated), is making something that people find very appealing, and Brazil has a very popular virtual influencer too, I think. I believe these things have already been commoditized and are well accepted because they have an edge over real people; they are very interesting right now. Some of them are purposely less realistic: especially in the fashion world, if you are edgy or different, you can use it to your advantage. In a sense they can be somewhat dystopian, with crazy looks, but in this case it's an artistic statement. We are currently working with one of the top fashion companies in Japan, Zozo, to create virtual influencers. We are experimenting: we started with static images, but next week we will add videos to demonstrate that we can produce video content with virtual influencers at scale, using some of the AI synthesis techniques we developed for face digitization. In fact, you can see that the faces look different from traditional CGI faces.

The real telepresence

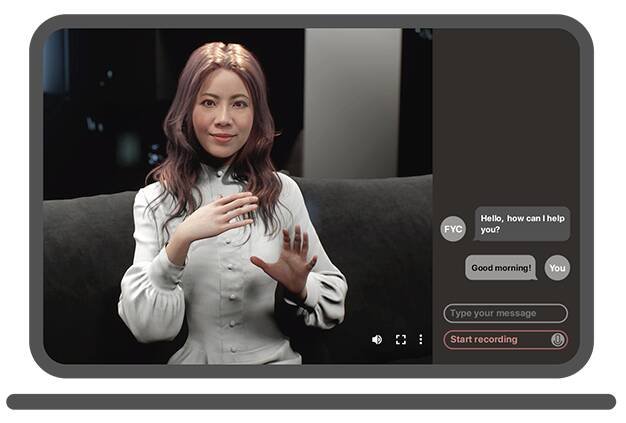

The future of commerce, especially e-commerce, will necessarily involve digital humans, or virtual beings, as a natural evolution of today's chatbots. The same technologies that make virtual beings more realistic can also improve virtual communication, by digitizing faces with all their expressions and transferring them to the virtual world, as with Animoji. At the same time, it is important to carry the interaction from the virtual world back into the real one, from seeing other digital humans or virtual assistants to haptic devices that provide a sense of touch. Hao Li explains it like this:

Most of the work we are doing now focuses on skilled content creation or on human-machine interfaces, like interacting with a virtual assistant [Hao Li gave an example by interacting with a digitized version of his wife at a Virtual Beings Summit]. In the future the focus will be on communication, bringing people virtually into the same room. Microsoft HoloLens has a nice concept video showing people being digitally teleported into other rooms. This is the future that is coming, starting in the professional sector within the next five years and then moving to the consumer world, and there will be a growing need for digital humans. Obviously we don't want to focus only on faces, but on whole-body language and physical interaction. We will need a combination of hardware technologies, from wearable sensors to eye-tracking systems, that are still at a very early stage of development: think of VR/AR systems, which are still very bulky, while holograms are still at a very early stage of research. Potentially we will see many solutions arriving, and they must arrive, because we live in a three-dimensional world, and as we move to a digital world we simply can't ignore its 3D aspect.

These topics are very timely, with lockdown orders, curfews and remote working, as Matteo Moriconi, director of VFXRio Live and president of the Brazilian Association of Visual Technology, notes. Hao Li readily agrees:

The pandemic has surely accelerated a lot of thinking in this direction. At Pinscreen, before this, people were saying "why should I have an avatar? It's a nice-to-have", but now they say "why don't we have one yet?!" Think about school and distance learning: we are limited to a 2D screen and a chat, and often we don't turn the camera on; there's no way this can be effective. All these things have prompted people to think about virtual presence and digital avatars. PwC London has already started a pilot program, giving VR headsets to employees working from home and letting them attend two or three meetings per week in VR, with very good results.

Hao concludes:

It's a core aspect of human nature: we are social animals, and we need to interact with other people every day.

And we thank him for what he does to make virtual interactions more...human.